3D Model Guided Endodontics: A New Technique Utilizing Augmented Reality and Artificial Intelligence

By Dr. Bobby Nadeau

The pace of technological advancement in the fields of augmented reality and artificial intelligence is increasing rapidly. The emergence of more powerful computers and a better understanding of machine learning are now opening new possibilities in clinical Endodontics. Augmented reality (AR) is a technology that overlays digital information, such as images, text, or 3D models, onto the real world in real time, typically viewed through a smartphone, tablet, smart glasses or a dedicated AR headset.

Artificial intelligence (AI) is the development of computer systems capable of performing complex tasks that typically require human intelligence, often much more efficiently. The main subfields of AI include machine learning, deep learning and computer vision. Machine learning includes systems that improve from experience and data without being explicitly programmed. Deep learning is a subset of machine learning that uses multi-layered neural networks. Computer vision allows computers to interpret and understand visual information.

Guided Endodontics

The concept of image guided Endodontics (IGE) was first described by Clark and Khademi in 2010 (1). IGE can be defined as a minimally invasive, precision-driven philosophy and technique for locating root canal systems that uses 3D imaging as the primary guide for access cavity design, rather than relying solely on traditional 2D radiography and average anatomical knowledge.

The emergence of computer guided Endodontics now allows clinicians to use real-time 3D imaging, preoperative planning software, and intraoperative tracking systems to precisely guide surgical instruments during an operation. This is particularly useful in cases of canal calcification and in microsurgery. The benefits of guided surgery in general include less invasive and more efficient procedures and the potential for enhanced post-operative healing and outcomes. However, computer guided Endodontics requires a more complex setup, a significant financial investment and a steep initial learning curve.

3D Model Guided Endodontics

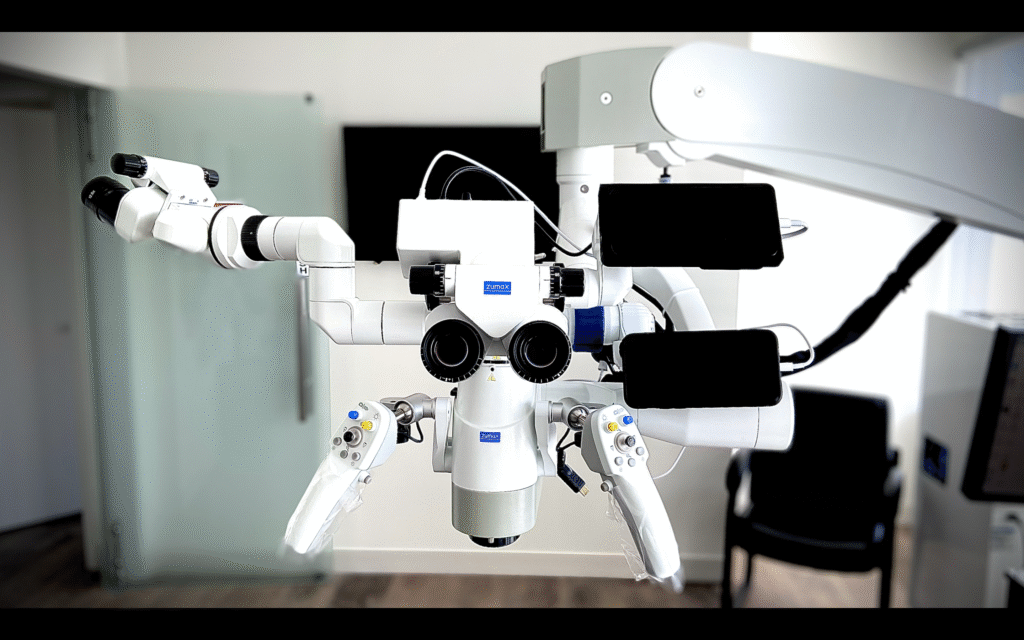

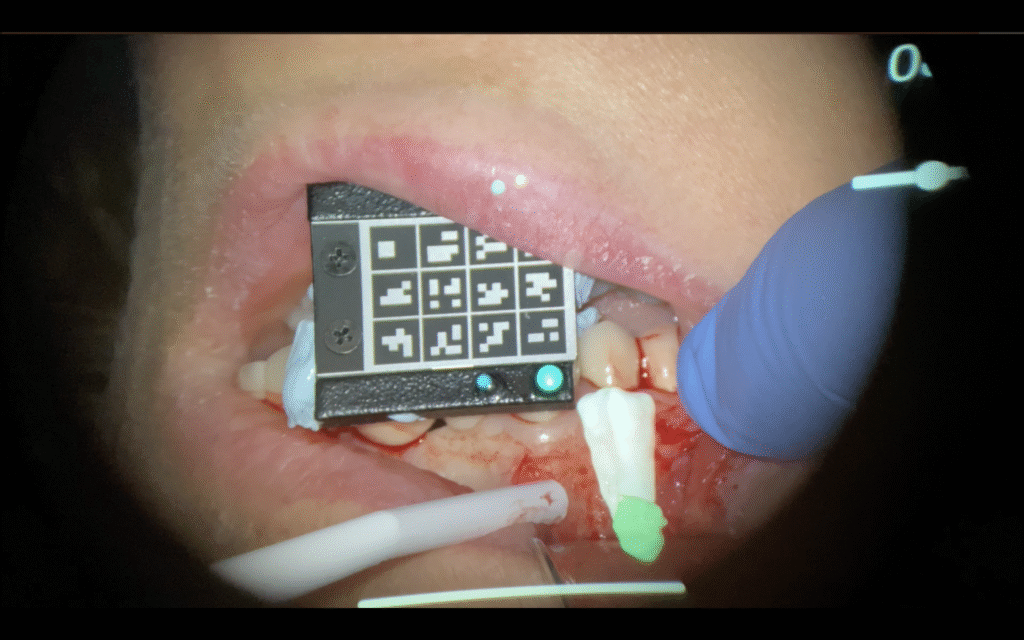

This technique uses pre-operative CBCT data to produce a 3-dimensional segmented model of different dental structures. The author uses Relu Creator (Belgium), a browser-based software that utilizes AI, mainly convolutional neural networks, to produce segmented 3D models from CBCT data. This software automatically segments structures such as hard tooth structure, canal spaces, bone, sinus cavities and the inferior alveolar nerve canal. The final segmented 3D model can be exported from Relu Creator and imported into a smartphone application that allows the 3D model to be viewed and manipulated. The smartphone is mounted onto the surgical operating microscope using a custom smartphone adapter (Zumax Medical, China) and connected to the DentSight AR Heads-up Display module (Zumax Medical, China) through an HDMI connection (Fig 1). This allows the segmented 3D model to be injected into the field of view of the microscope. With this augmented reality setup, the clinician can view deeper structures not visible to the naked eye (root dentin, osseous structures, canal space) overlaid in real time on the real patient anatomy as seen through the microscope. The clinician can manipulate the 3D model in real time directly on the smartphone to orient and position it as desired.
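The idea of overlaying a rendered model on the live view can be illustrated with a small sketch. The Python below alpha-blends a semi-transparent overlay image onto a scene image, pixel by pixel. It is an illustration only, under stated assumptions: the function names `blend_pixel` and `composite` are hypothetical, images are plain nested lists of RGB tuples, and nothing here describes how the DentSight module or the smartphone application works internally.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]                 # RGB values, 0-255
OverlayPixel = Tuple[int, int, int, float]   # RGB plus opacity in [0, 1]


def blend_pixel(scene: Pixel, overlay: OverlayPixel) -> Pixel:
    """Alpha-blend one overlay pixel onto one scene pixel (hypothetical helper)."""
    r, g, b, alpha = overlay
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    # alpha = 0 leaves the scene untouched; alpha = 1 shows only the overlay.
    return tuple(
        round(alpha * o + (1.0 - alpha) * s)
        for o, s in zip((r, g, b), scene)
    )


def composite(
    scene: List[List[Pixel]], overlay: List[List[OverlayPixel]]
) -> List[List[Pixel]]:
    """Blend an overlay image onto a scene image of the same dimensions."""
    if len(scene) != len(overlay) or any(
        len(s_row) != len(o_row) for s_row, o_row in zip(scene, overlay)
    ):
        raise ValueError("scene and overlay must have the same dimensions")
    return [
        [blend_pixel(s, o) for s, o in zip(s_row, o_row)]
        for s_row, o_row in zip(scene, overlay)
    ]
```

In this sketch, a fully transparent overlay pixel leaves the underlying anatomy visible, while a partially opaque one tints it, which is the general effect of seeing a segmented canal space "through" the crown.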

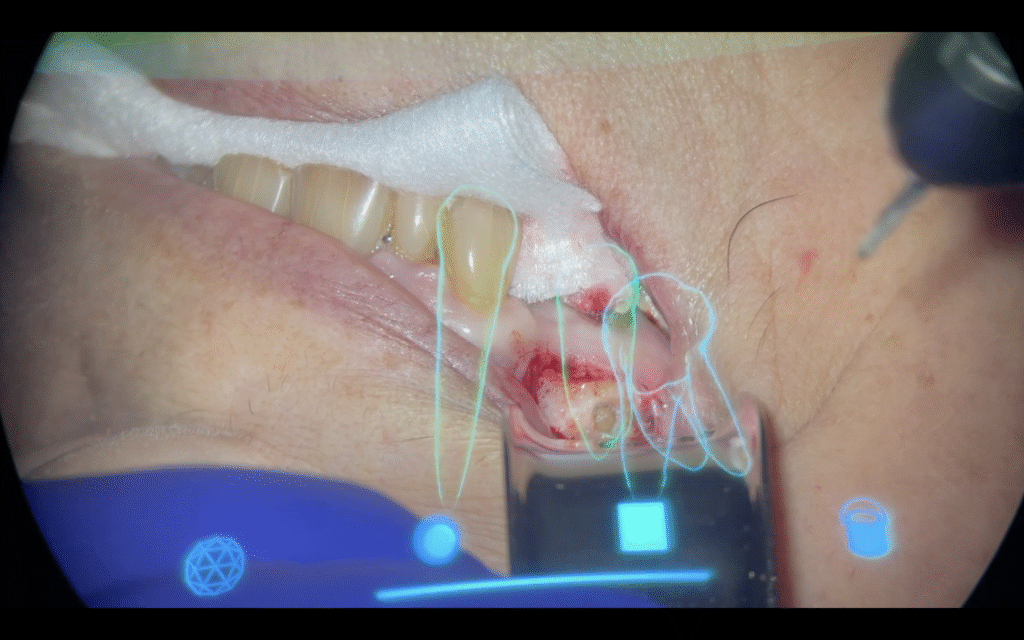

Figure 1

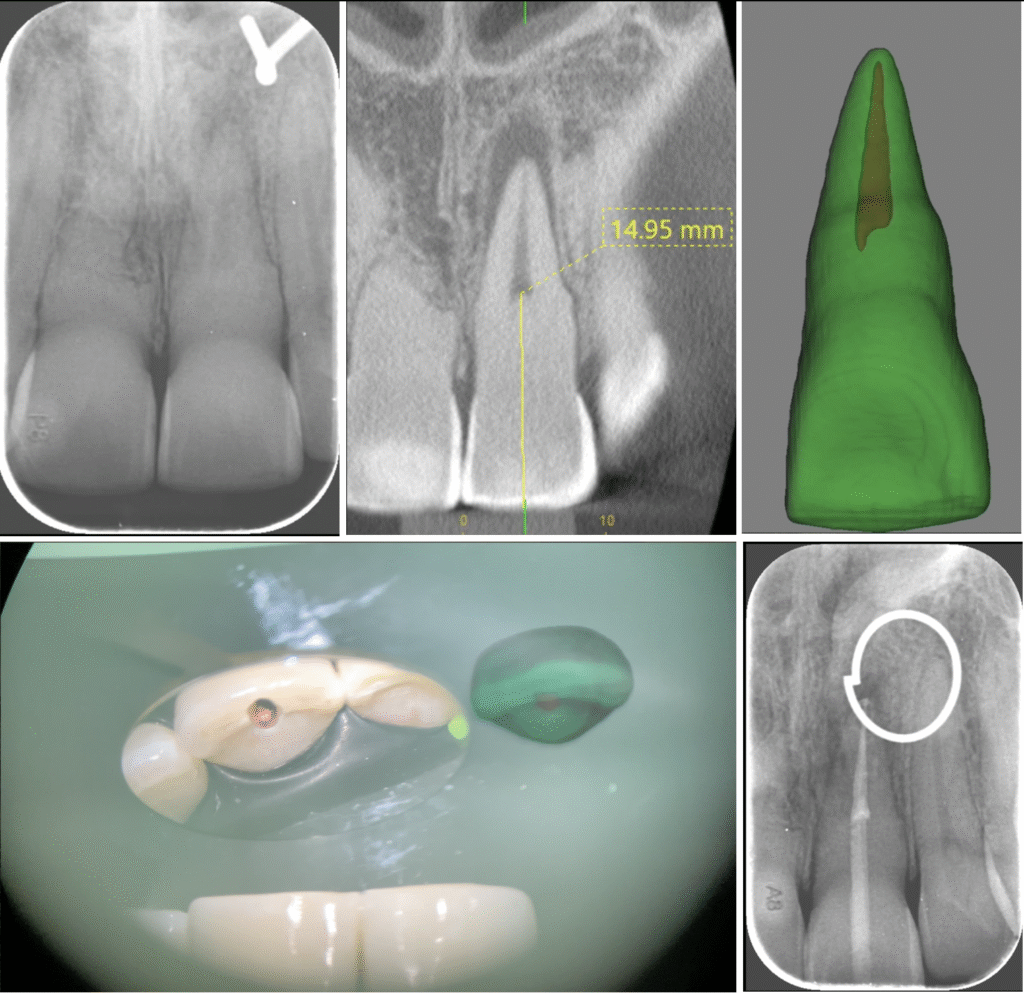

Non Surgical Case

This patient presented with an upper central anterior tooth with pulpal necrosis, symptomatic apical periodontitis and a receded canal (Fig 2). A CBCT scan was taken and a 3D model segmenting the hard tooth structure and canal space was produced using Relu Creator. The 3D model was overlaid within the clinical field of view as seen through the microscope using the setup described above. The 3D model was positioned in the same orientation as the real anterior tooth as seen through the indirect vision of the dental mirror. The access cavity was prepared, guided by the 3D model, and the root canal treatment was subsequently completed. Previously, using CBCT data, the clinician would have to manually scroll up and down through the different slices of the scan to assess the relationship between the canal position and the external form of the crown and plan the access adequately. The benefit of 3D model guided Endodontics is that, for the first time, the clinician can see the relationship between the canal space and the outer form of the crown within a single view. The augmented reality setup allows the clinician to maintain focus on the surgical field without having to look away at an external monitor.

Figure 2

3D Model Guided Endodontics for Microsurgery

The same technique described above can be used to overlay hard structures over the patient’s gingiva to plan flap designs and root resections during Endodontic microsurgeries. Figure 3 shows the outline of the hard tooth structure overlaid on the patient’s jaw as seen through the microscope by the operator.

Figure 3

Summary

The 3D model guided technique using the DentSight AR Heads-Up Display module and artificial intelligence based software has the potential to give clinicians real-time visual guidance during Endodontic procedures. Compared with more complex computer guided systems, this technique is more cost effective and more easily integrated into the microscope based workflow. Future versions of 3D model guided Endodontics are expected to use computer vision with object recognition and object tracking to ensure the segmented 3D model remains overlaid in the appropriate position and orientation despite patient movement during the procedure (Fig 4).
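The tracking idea mentioned above can be sketched in simplified form. Assuming a computer vision step (not shown, and hypothetical here) reports the image positions of two landmarks on the tooth before and after the patient moves, a 2D rotation and translation can be estimated and applied to every overlay point so that the model follows the tooth. The function names `estimate_rigid_2d` and `apply_rigid_2d` are illustrative only; a real system would work in 3D with many more landmarks and would need to handle noise.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def estimate_rigid_2d(
    ref_a: Point, ref_b: Point, cur_a: Point, cur_b: Point
) -> Tuple[float, Point]:
    """Estimate the rotation (radians) and translation that move two
    reference landmarks onto their current tracked positions.

    Assumes pure rotation plus translation (no scaling, no noise).
    """
    ref_angle = math.atan2(ref_b[1] - ref_a[1], ref_b[0] - ref_a[0])
    cur_angle = math.atan2(cur_b[1] - cur_a[1], cur_b[0] - cur_a[0])
    theta = cur_angle - ref_angle
    c, s = math.cos(theta), math.sin(theta)
    # Translation is whatever remains after rotating landmark A.
    tx = cur_a[0] - (c * ref_a[0] - s * ref_a[1])
    ty = cur_a[1] - (s * ref_a[0] + c * ref_a[1])
    return theta, (tx, ty)


def apply_rigid_2d(point: Point, theta: float, t: Point) -> Point:
    """Rotate a point by theta about the origin, then translate it by t."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * point[0] - s * point[1] + t[0],
            s * point[0] + c * point[1] + t[1])


# Example: the tooth rotates a quarter turn and shifts in the image.
theta, t = estimate_rigid_2d((0.0, 0.0), (1.0, 0.0), (2.0, 3.0), (2.0, 4.0))
moved = apply_rigid_2d((1.0, 0.0), theta, t)  # overlay point follows the tooth
```

Applying the same estimated transform to every point of the overlay on each video frame is what would keep the model registered to the tooth in this simplified picture.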

Figure 4

References

1. Clark JR, Khademi JA. Modern molar endodontic access and directed dentin conservation. Inside Dentistry 2010;6(8):58-71.